Blog post written by László Hunyadi and Tamás Váradi, edited by Darja Fišer and Jakob Lenardič

The idea of building a multimodal corpus of Hungarian (with annotation of text, prosody, gaze, gesture, etc.) was conceived 10 years ago. The aim was to improve human-machine communication applications (such as chatbots) by equipping them with comprehensive knowledge of human-human communicative behaviour. The underlying assumption was that human behaviour is built from certain primitives, and that these behavioural primitives form temporal patterns which can be assigned functional interpretations. For instance, a prosodic feature such as falling intonation followed by a visual cue such as a downward gaze often signals that the speaker wishes to end his or her turn in the conversation. A single primitive can thus serve as a marker which, with a certain probability, points to a pattern with a given interpretation.
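To make the idea of a temporal pattern more concrete, here is a minimal Python sketch (not the HuComTech tooling) that searches two time-aligned annotation tiers for the turn-ending cue described above: a falling contour followed shortly by a downward gaze. The `Annotation` record, the tier labels ("fall", "down") and the 500 ms window are hypothetical choices made for illustration; the actual corpus uses its own ELAN tier names and values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Annotation:
    # Hypothetical record for one annotated interval on a tier.
    start_ms: int
    end_ms: int
    label: str


def find_turn_end_cues(intonation, gaze, max_gap_ms=500):
    """Return (tone, look) pairs where a falling contour is followed by a
    downward gaze that starts within max_gap_ms after the contour ends.

    Labels and the window size are illustrative assumptions."""
    cues = []
    for tone in intonation:
        if tone.label != "fall":
            continue
        for look in gaze:
            gap = look.start_ms - tone.end_ms
            # "Followed by": the gaze must start after the fall ends,
            # but not later than the allowed window.
            if look.label == "down" and 0 <= gap <= max_gap_ms:
                cues.append((tone, look))
                break  # keep only the first matching gaze event
    return cues


# Toy tiers (invented data, not taken from the corpus).
intonation = [Annotation(0, 800, "rise"), Annotation(1200, 2000, "fall")]
gaze = [Annotation(500, 900, "up"), Annotation(2100, 2600, "down")]

print(find_turn_end_cues(intonation, gaze))
```

Counting how often such pairs actually coincide with a change of speaker would give a rough estimate of the probability with which the cue points to a turn end.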

When building the corpus, we first observed and annotated behavioural primitives at multiple levels, which included intonation, morpho-syntactic, video, and unimodal and multimodal pragmatic annotation, among others. Next, we interpreted the complex raw annotations in terms of their pragmatic and communicative function, and finally we identified actual patterns of behaviour based on both the raw and the interpreted annotation. In total, about 50 hours of dialogue with 111 subjects were recorded in two scenarios (formal and informal). HunCLARIN experts captured the multimodality of human-human communication by observing a wide range of both verbal and non-verbal behaviour. The primitives of non-verbal behaviour were either visual or audio in nature. The visual primitives included eye gaze (direction and blinking), eyebrow, head, hand and (upper) body movement, perceived emotions, and a range of pragmatic and communicative categories (such as turn management, agreement, certainty, etc.). The non-verbal audio primitives included a range of prosodic features, perceived emotions, and a range of pragmatic and communicative categories.

Annotation of the HuComTech corpus with the ELAN tool.

The annotation of verbal primitives aimed to provide a first-of-its-kind syntactic analysis of spoken language. This was partly done with magyarlanc, another Hungarian CLARIN tool, while the German CLARIN tool WebMAUS was used to align words on the timeline. A distinctive feature of the HuComTech corpus is its conception of multimodality, which combines three approaches: the annotation of primitives and functions based on visual observation alone, the same kind of annotation based on audio observation alone, and genuine multimodality based on audio and visual cues together. This threefold distinction of primitives within multimodality makes it possible to capture behavioural patterns at three levels (visual, audio, and their joint complexity), facilitating the building of two-way communication systems. The corpus has also been used successfully in linguistic research; for instance, Hunyadi (2019) used HuComTech to study the multimodal expression of agreement and disagreement, while Szekrényes (2019) presented an approach to the post-processing of temporal patterns based on the multimodal data in the corpus.

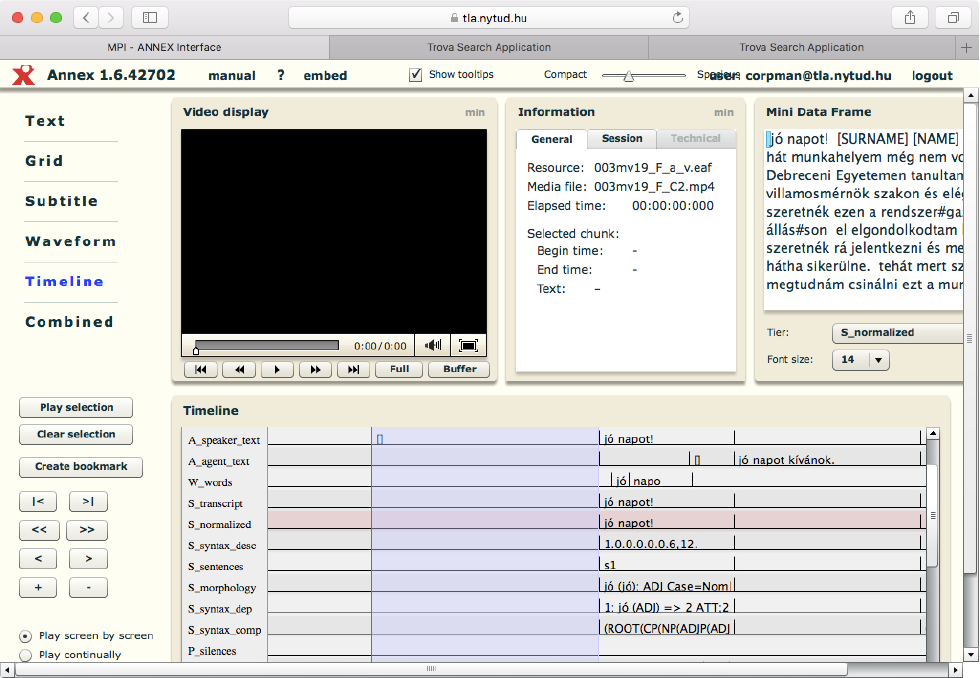

Browsing the corpus at the HUN-CLARIN repository tla.nytud.hu with the Annex tool.

For more information about the HuComTech corpus, see Hunyadi et al. (2018) in the CLARIN2018 Conference proceedings (pp. 6-11). The corpus is available at http://tla.nytud.hu.
